Teams, Safety Prompts, Better Context & Macro-AgentNovember 24, 2025Teams are live. Safety prompts and context improvements are rolling out now. Introducing Teams on AlmmaGPTTeams are live! Teams let groups centralize billing, so coworkers and collaborators can enjoy all the benefits of AlmmaGPT under a single unified billing account and share savings across the entire organization. How Teams work — quick startSet up a Team in minutes and bring your group into a shared AlmmaGPT subscription. Centralized controls make administration and billing simple. 1

Go to Profile and select ‘My Teams’

2

Create your first Team.

3

Name your Team.

4

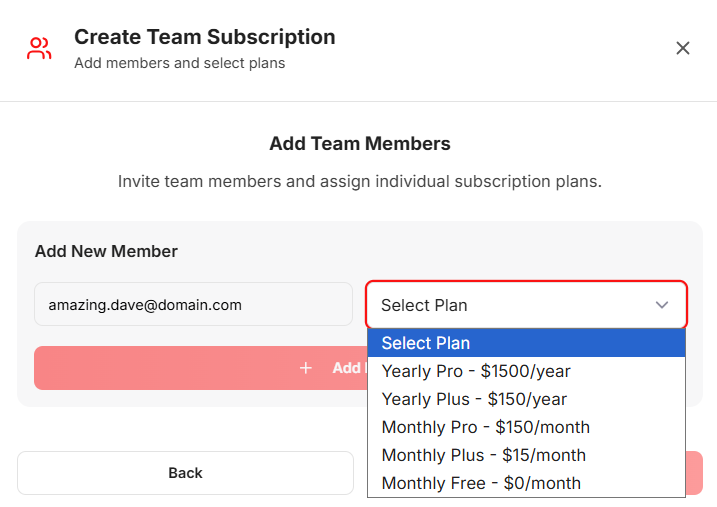

Add each team member’s email and select their plan. At least one member must have a paid account. You can choose monthly or yearly payments. Stripe will handle invoicing and proper billing.

5

You can complete the payment securely on Stripe. Your teammates will receive an invitation to join your AlmmaGPT team. We are adding two system prompts: Dignity & No-hallucination to make AlmmaGPT a safer platform.

To improve safety and trust across the platform, we’re adding two system prompts that will precede every conversation on AlmmaGPT:

1. Dignity-first prompt: instructs LLMs to respect the dignity of direct and indirect users of their outputs by avoiding harm, discrimination, and content that undermines human dignity.

2. No-hallucination & sourcing prompt: requires models to avoid inventing facts, provide sources for assertions, and explain any estimations or assumptions used in responses. When an estimate is given, the model will show the reasoning and steps behind it.
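For readers curious how prompts like these can sit in front of every conversation, here is a minimal sketch. It assumes the common chat-message layout of `role`/`content` dictionaries; the prompt wording, the `with_guardrails` helper, and the constant names are hypothetical illustrations, not AlmmaGPT's actual implementation or prompt text.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical wording, paraphrased from the two descriptions above.
DIGNITY_PROMPT = (
    "Respect the dignity of direct and indirect users of your outputs: "
    "avoid harm, discrimination, and content that undermines human dignity."
)
SOURCING_PROMPT = (
    "Do not invent facts. Provide sources for assertions, and show the "
    "reasoning and steps behind any estimate or assumption."
)


def with_guardrails(conversation: List[Message]) -> List[Message]:
    """Return a new message list with both baseline prompts placed first.

    The caller's list is left unmodified.
    """
    guardrails = [
        {"role": "system", "content": DIGNITY_PROMPT},
        {"role": "system", "content": SOURCING_PROMPT},
    ]
    return guardrails + list(conversation)


# Usage: the user's message ends up after the two system prompts.
chat = [{"role": "user", "content": "Estimate how many teams use shared billing."}]
for message in with_guardrails(chat):
    print(message["role"], "->", message["content"][:50])
```

Building a new list rather than editing the conversation in place keeps the stored chat history free of the guardrail text, which is one possible design choice among several.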

These prompts are baseline guardrails and will apply across public chats, Teams, and family accounts.

Stronger context: Personal preferences become memories

Context is the keystone of useful LLM outputs. Better context means fewer follow-ups, more accurate answers, and faster outcomes. On AlmmaGPT, we’re improving context handling by automatically storing user preferences as editable memories. Those memories will help each session start closer to what you need, without repeating the same preferences each time.

Keeping AlmmaGPT Safe: Anti-Abuse Measures

We want AlmmaGPT to be a safe, productive place for everyone: creators, families, and professionals. To protect the community, we use a combination of automated safeguards and human review to stop abuse before it spreads. These measures keep the marketplace fair, protect minors, and ensure creators’ work isn’t misused.

1. Proactive protections: automated rate limits, content filters, and anomaly detection help prevent spam, excessive automated scraping, harassment, and other misuse.

2. Graduated responses: most incidents trigger friendly warnings and temporary limits. Only repeated or severe abuse leads to stronger actions. This approach helps well-intentioned users quickly correct their behavior.

3. Clear feedback: when you approach a limit or trigger a filter, the system will warn you with an explanation and next steps so you can adjust your use without surprises.

4. Human review & appeals: suspicious cases escalate to our moderation team for review. If you believe a limit or action was applied in error, you can contact support and request an appeal by clicking the Help icon in the bottom right of your screen and opening a ticket.
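The graduated-response idea in the list above can be sketched in a few lines. This is an illustration only: the `GraduatedResponder` class, the action names, and the rule that the third incident escalates are assumptions made for the example, not AlmmaGPT's real thresholds or code.

```python
from collections import defaultdict

# Hypothetical escalation ladder, mildest action first.
ACTIONS = ["warning", "temporary_limit", "human_review"]


class GraduatedResponder:
    """Track incidents per user and pick a proportionate action."""

    def __init__(self) -> None:
        self.strikes = defaultdict(int)

    def record_incident(self, user_id: str, severe: bool = False) -> str:
        # Severe abuse skips the ladder and goes straight to human review.
        if severe:
            return "human_review"
        self.strikes[user_id] += 1
        # Repeated incidents climb the ladder and stay at the top rung.
        level = min(self.strikes[user_id], len(ACTIONS)) - 1
        return ACTIONS[level]


# Usage: a user who keeps tripping a filter moves up one step at a time.
responder = GraduatedResponder()
for _ in range(3):
    print(responder.record_incident("user-123"))
```

A first incident yields only a warning, so a well-intentioned user has a chance to adjust before any limit applies.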

These protections make AlmmaGPT safer and more reliable for everyone. If you have questions about a warning or want guidance on best practices, visit our Help Center or contact support. We’re here to help.

Coming Soon!

AlmmaGPT as a Macro Agent: smarter prompting & routing

We are building AlmmaGPT into a Macro Agent. Using our proprietary ranking algorithm, AlmmaGPT will soon:

1. Suggest the best LLM (or combination of LLMs) for the task you’re doing.

2. Recommend marketplace products that match your brief and workflow.

3. Offer to find a Creator in our community to build a custom solution if nothing exact exists.

This makes AlmmaGPT not just a place to run prompts, but a guide to picking the right tools and people for the job.

Family-friendly Teams & accounts for minors

Building on Teams, we envision helping families bring their entire household on board. Adults will be able to create accounts for minors; accounts flagged as belonging to minors will include system prompts configured to produce age-appropriate outputs aligned with family safety settings.

Almma continues growing

Almma is expanding. Lucas has been extending our reach by participating in recent conferences in Rome and at the Builders of AI Forum, sharing our vision for accessible, responsible AI and forming partnerships that accelerate product development and community growth. Click to watch the video recap.

Research recognition

Our paper, “Unlocking $823 Billion in U.S. GDP: Task-Specific AI Agents as a Solution to Vacancy-Induced Output Loss,” has been ranked among the top downloads and top papers in several SSRN categories, highlighting the need for constructive, task-specific AI approaches that increase economic productivity while protecting users.

Happy Thanksgiving!

We’re deeply grateful you chose to use generative AI with us. Your trust drives our work to make AI more accessible, fair, and valuable for all.
